Beyond Instructions: A Taxonomy of Information Types in How-to Videos

CHI 2023

(* = Equal contribution)

How-to videos are rich in information—they not only give instructions, but also provide justifications or descriptions. People seek different information to meet their needs, and identifying different types of information present in the video can improve access to the desired knowledge. Thus, we present a taxonomy of information types in how-to videos. Through an iterative open coding of 4k sentences in 48 videos, 21 information types under 8 categories emerged. The taxonomy represents diverse information types that instructors provide beyond instructions. We first show how our taxonomy can serve as an analytical framework for video navigation systems. Then, we demonstrate through a user study (n=9) how type-based navigation helps participants locate the information they needed. Finally, we discuss how the taxonomy enables a wide range of video-related tasks, such as video authoring, viewing, and analysis. To allow researchers to build upon our taxonomy, we release a dataset of 120 videos containing 9.9k sentences labelled using the taxonomy.

We selected 120 videos from the HowTo100M dataset, a large-scale dataset of narrated how-to videos. Three of the authors then performed iterative open coding to analyze the content of the videos. Each author individually coded every sentence according to the information type they believed it conveyed. Every six videos, the three authors resolved conflicts through discussion and merged the codes. The process was repeated until convergence, defined as an iteration in which (1) no new types were added and (2) no types were merged or split. Convergence was reached after analyzing 48 videos, which form the basis of the taxonomy.
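The stopping rule above can be sketched in a few lines of Python. This is only an illustration of the two convergence conditions, not the authors' actual procedure or tooling: the `RoundResult` record, the function names, and the per-round counts in the usage note are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class RoundResult:
    """Outcome of one coding round (hypothetical record, not from the paper)."""
    videos_coded: int            # videos coded in this round (six in the study)
    types_added: int             # new information types introduced this round
    types_merged_or_split: int   # existing types merged or split this round


def has_converged(last_round: RoundResult) -> bool:
    """Both conditions must hold: no new types, and no merges or splits."""
    return last_round.types_added == 0 and last_round.types_merged_or_split == 0


def videos_until_convergence(rounds: Iterable[RoundResult]) -> Optional[int]:
    """Return the number of videos coded when convergence is first reached.

    Returns None if no round satisfies the convergence conditions.
    """
    total = 0
    for result in rounds:
        total += result.videos_coded
        if has_converged(result):
            return total
    return None
```

For example, with made-up rounds `[RoundResult(6, 5, 2), RoundResult(6, 1, 0), RoundResult(6, 0, 0)]`, the first two rounds still change the code book, so the function stops at the third round and returns 18.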

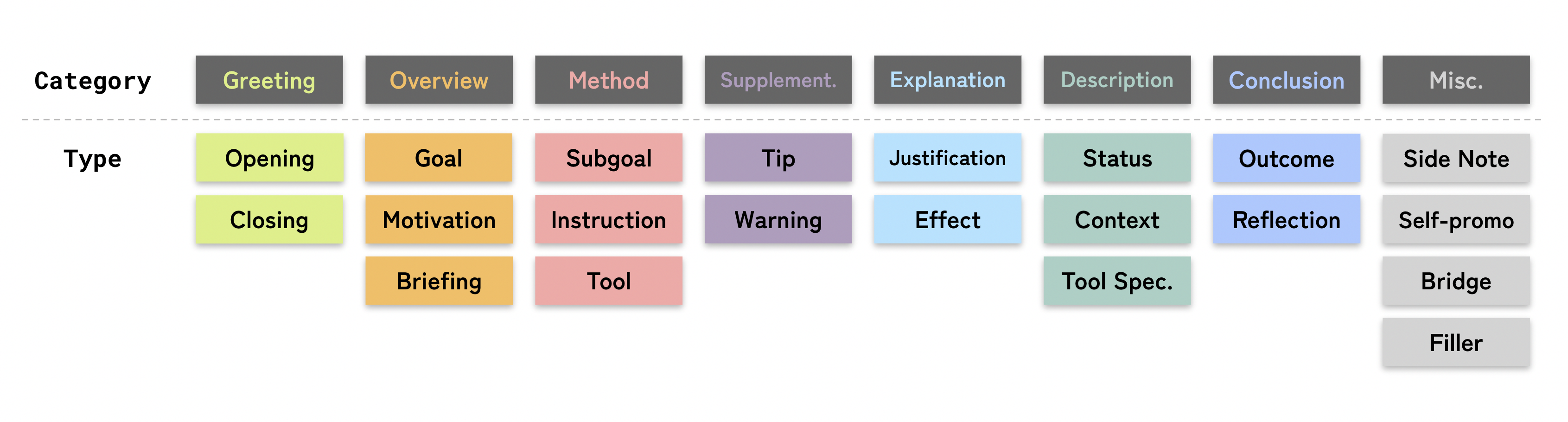

Through the iterative open coding, 21 types of information under 8 categories were identified. Please refer to the paper for more details about each information type!

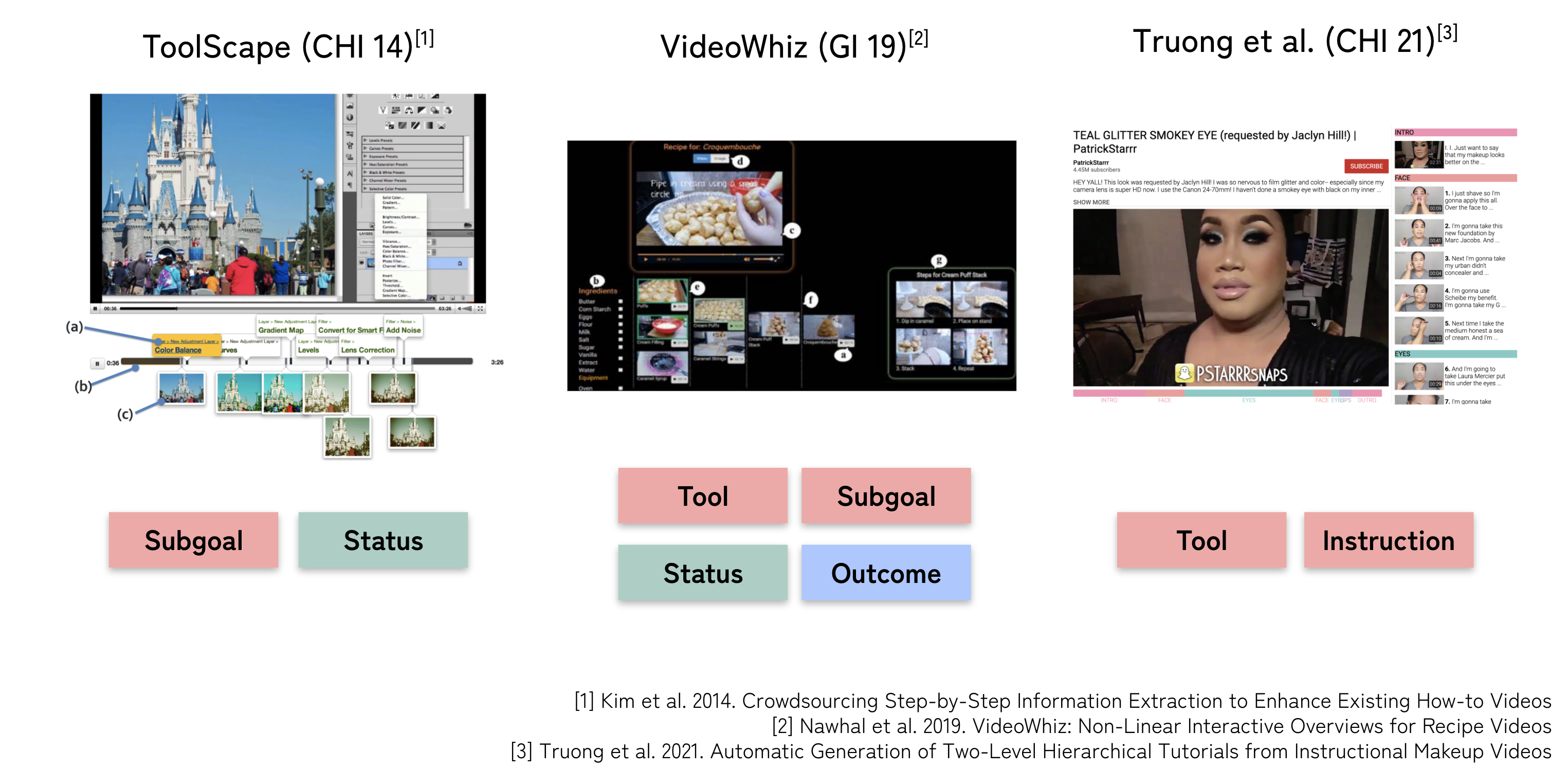

Our taxonomy provides an opportunity to analyze which information types each existing video navigation system focuses on. Such an analysis can be used to identify the important information types that best fit the users’ context.

We conducted an exploratory user study to investigate how users would leverage the information types for navigating videos. The results show that participants effectively used different information types to find the information needed for different navigation tasks. The study also revealed that the relevant information types vary with the task, topic, and user context, and that information types other than instructions can also play an important role in accessing desired information.

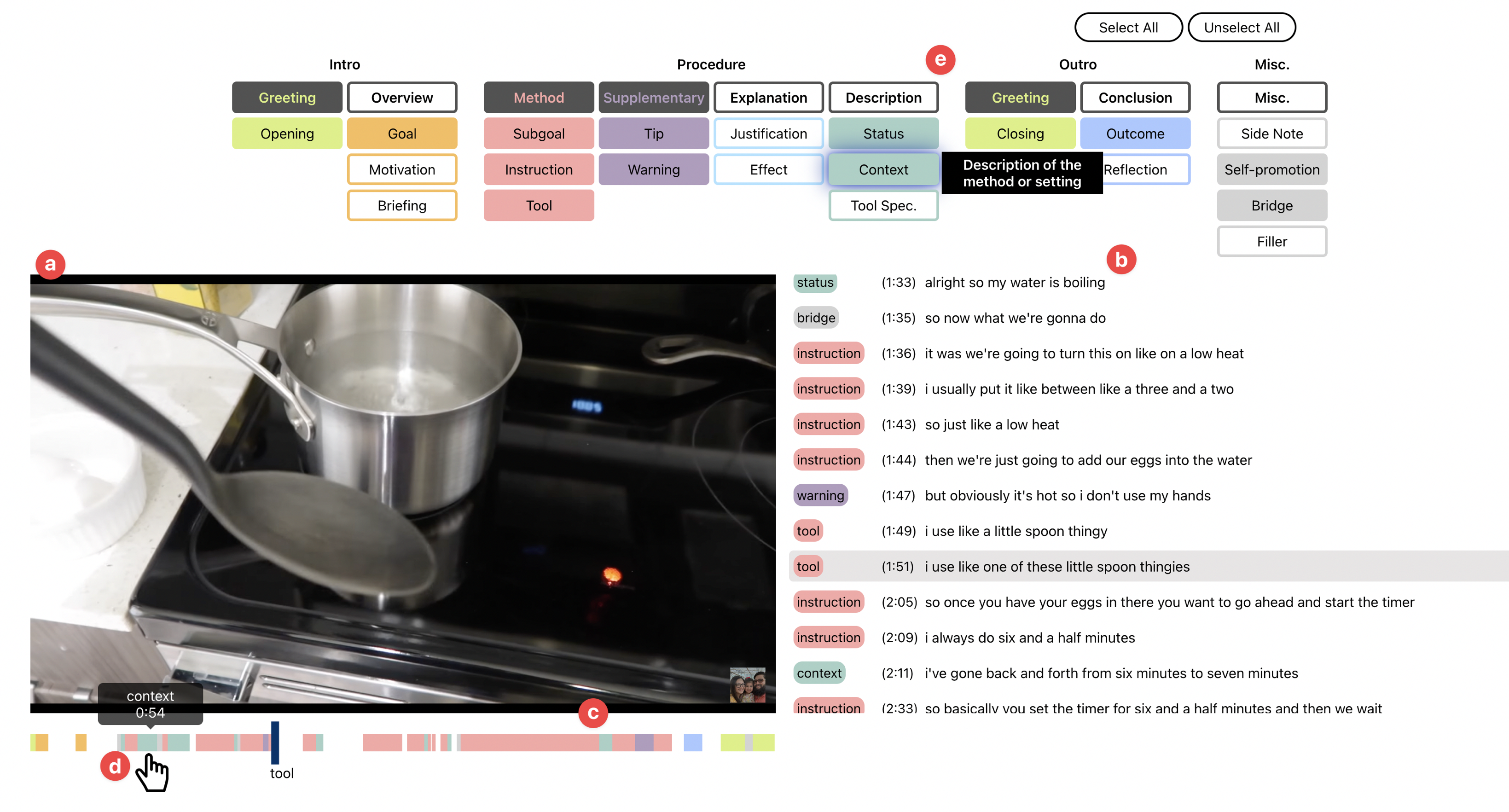

Our research probe used in the user study. (a) Users can see the video. (b) Each sentence of the script is shown with its timestamp and information type. Each type label is color-coded based on the category. (c) The same information is shown in the timeline. (d) When users hover over each segment, they can see the type and (e) its definition in the Filter panel. Users can filter segments based on their type or category in the Filter panel. Only the filtered segments are shown in the transcript panel and the timeline.
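The filtering behavior described in the caption can be sketched as follows. This is a minimal illustration, not the probe's actual implementation: the `Segment` record and `filter_segments` function are hypothetical, and the type and category strings in the usage note are illustrative labels that may not match the exact names in the taxonomy.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Segment:
    """One transcript sentence with its labels (hypothetical structure)."""
    start: float      # start time in seconds
    end: float        # end time in seconds
    text: str         # sentence from the script
    info_type: str    # information type label
    category: str     # category the type belongs to


def filter_segments(
    segments: Iterable[Segment],
    types: Optional[Iterable[str]] = None,
    categories: Optional[Iterable[str]] = None,
) -> List[Segment]:
    """Keep segments whose type or category is selected.

    With nothing selected, every segment is kept, so the transcript
    and timeline show the full video.
    """
    selected_types = set(types or [])
    selected_categories = set(categories or [])
    if not selected_types and not selected_categories:
        return list(segments)
    return [
        s for s in segments
        if s.info_type in selected_types or s.category in selected_categories
    ]
```

Given segments labeled, say, "Greeting", "Instruction", and "Justification", calling `filter_segments(segments, types=["Justification"])` would keep only the justification sentences, and the same filtered list could drive both the transcript panel and the timeline.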


We release the dataset, HTM-Type, which contains 9.9k sentences from 120 videos, with each sentence labeled according to the taxonomy. For each sentence, the dataset records the id, publication date, duration, and genre of its video, the start and end timestamps of the sentence, and its type and category labels.
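As a rough sketch of how such per-sentence records might be explored, the snippet below reads rows with the fields listed above and counts sentences per label. The file format and column names are assumptions made for illustration (the released files may be organized differently), and the sample rows are made-up placeholders, not data from HTM-Type.

```python
import csv
import io
from collections import Counter
from typing import Dict, List, TextIO

# Made-up sample in an ASSUMED CSV layout; column names are hypothetical.
SAMPLE_CSV = """video_id,publication_date,duration,genre,start,end,type,category
vid_a,2017-05-01,312,Food,0.0,3.5,Greeting,Greeting
vid_a,2017-05-01,312,Food,3.5,9.0,Instruction,Method
vid_a,2017-05-01,312,Food,9.0,14.2,Justification,Explanation
vid_b,2018-11-20,145,Crafts,0.0,6.1,Instruction,Method
"""


def load_rows(fileobj: TextIO) -> List[Dict[str, str]]:
    """Read every row as a dict keyed by the header names."""
    return list(csv.DictReader(fileobj))


def count_by(rows: List[Dict[str, str]], column: str) -> Counter:
    """Count how many sentences carry each value of the given column."""
    return Counter(row[column] for row in rows)


rows = load_rows(io.StringIO(SAMPLE_CSV))
type_counts = count_by(rows, "type")
category_counts = count_by(rows, "category")
```

The same `count_by` helper works for any column, so it could also summarize sentences per genre or per video once the real column names are known.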

If you have any questions about the dataset, please contact the first author.

@inproceedings{yang2023videomap,

author = {Yang, Saelyne and Kwak, Sangkyung and Lee, Juhoon and Kim, Juho},

title = {Beyond Instructions: A Taxonomy of Information Types in How-to Videos},

year = {2023},

isbn = {9781450394215},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3544548.3581126},

doi = {10.1145/3544548.3581126},

abstract = {How-to videos are rich in information—they not only give instructions but also provide justifications or descriptions. People seek different information to meet their needs, and identifying different types of information present in the video can improve access to the desired knowledge. Thus, we present a taxonomy of information types in how-to videos. Through an iterative open coding of 4k sentences in 48 videos, 21 information types under 8 categories emerged. The taxonomy represents diverse information types that instructors provide beyond instructions. We first show how our taxonomy can serve as an analytical framework for video navigation systems. Then, we demonstrate through a user study (n=9) how type-based navigation helps participants locate the information they needed. Finally, we discuss how the taxonomy enables a wide range of video-related tasks, such as video authoring, viewing, and analysis. To allow researchers to build upon our taxonomy, we release a dataset of 120 videos containing 9.9k sentences labeled using the taxonomy.},

booktitle = {Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems},

articleno = {797},

numpages = {21},

keywords = {Video Content Analysis, How-to Videos, Information Type},

location = {Hamburg, Germany},

series = {CHI '23}

}

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (NRF-2020R1C1C1007587) and the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korean government (MSIT) (No.2021-0-01347, Video Interaction Technologies Using Object-Oriented Video Modeling).